By: Orasi; Rob Jahn, Technical Partner Manager of Dynatrace; and Paul Bruce, Director of Customer Engineering of Neotys

I, Rob Jahn, recently joined two industry veterans and Dynatrace partners, Orasi and Paul Bruce of Neotys, as panelists to discuss how performance engineering and test strategies have evolved as they pertain to customer experience. This blog summarizes our conversation around the questions posed.

But before I do that, I must give a shout-out to City Brew Tours, who supplied beer and cheese to all the attendees and panelists and led us through a tasting. Keep reading and I will share our favorite pairings!

What trends are you seeing in the industry?

We see every industry feeling the pressure to respond to unrelenting customer demand for full-service web and mobile channels to transact. Company brands are now judged by their “app” and “app experience,” and customers expect every application to be as fast as Google. During the COVID-19 pandemic, organizations that lack the processes and systems to scale and adapt to remote workforces and increased online shopping are especially feeling the pressure.

Many organizations recognize that addressing customer demand requires several transformations to accelerate time to market and provide a first-class user experience. Change starts by thoroughly evaluating whether the current architecture, tools, and processes for configuration, infrastructure, code delivery pipelines, testing, and monitoring enable a better customer experience, delivered faster and with high quality.

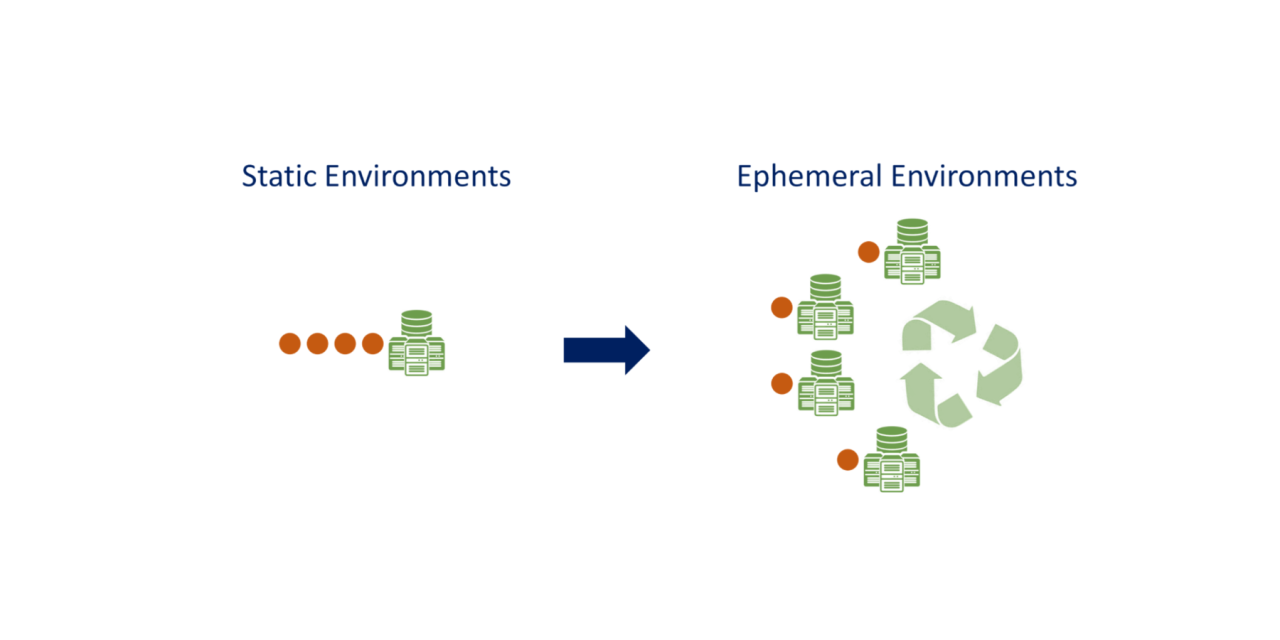

The organizations we see changing are increasing investment in their service offerings, adopting the Scaled Agile Framework (SAFe) to deliver incremental value and increase productivity, and putting DevOps principles and culture at their cornerstone. Rethinking the process means digital transformation: modernizing legacy monolithic applications into microservice architectures that use cloud infrastructure and services and are built, deployed, and operated with “everything as code.”

We see successful teams manage complexity by making smaller, more precise changes (think microservices over monolithic systems) and by investing ruthlessly in automating common, toilsome tasks. See “A Philosophy of Software Design” by John Ousterhout for more on this philosophy. That said, starting over to “build it right from the beginning” with new, separate teams may also be warranted.

What do you see as the biggest challenge for performance and reliability?

Biz and tech are getting more complex, not less. This complexity comes in the form of new technologies like microservices and containers, heavy use of third-party integrations, and distributed transactions across multiple cloud environments and data centers. All this complexity raises the bar for end-user and application monitoring, and for many organizations their existing tooling poses challenges such as:

- A disconnect between the actual end-user experience and typical IT data sources such as logs, metrics, and traces.

- Different teams run their own siloed monitoring solutions and don’t share access.

- Traditional monitoring tools don’t scale to dynamic cloud environments, don’t support new technologies, lack distributed tracing, and have no Application Programming Interface (API) for integrating with testing and workflow platforms.

Another challenge comes from the delays created by hand-offs between development teams and the traditional organizational function of a Performance Center of Excellence (COE). Although COE teams have highly skilled subject matter experts, rigorous processes, and control over the tools and testing solutions, these centralized models do not scale to meet the needs of ever-growing, distributed Agile teams.

As a counterpoint to the COE challenges, we also observe some challenges within independent Agile development teams:

- Non-functional requirements (NFRs) are not captured early in the software development lifecycle (SDLC) because of a disconnect with their operations counterparts or a lack of expertise and access to data.

- Constantly reinventing the wheel with a “Not Invented Here” bias. Teams who fall prey to this mentality often simply don’t apply known good practices consistently. We’ve heard, “let’s do some chaos engineering…” before the team has exercised basic practices like load testing and proper system monitoring, both of which are required components of chaos engineering.

What are the key requirements to address these challenges?

It helps to think of the requirements as a “three-body problem”: solving for the People, Process, and Technology perspectives, from where you are today to where you aspire to be in the future. If you just try to solve for a technology change without addressing how it affects the people and processes in place (or vice versa), you won’t see real success.

If you want to change the process, such as moving performance testing to continuous automation, you’ll need the right tools. But you’ll also need to engage, enable, and embed with teams outside your own subject matter expert (SME) group.

For the people angle, Quality and Engineering managers and VPs who want to materially improve their systems and performance engineering approach need to make time for learning new tools and skills (technology), automating toil (out of their processes), and delivering training (to product teams). In highly successful performance engineering organizations, automation follows a strategic plan aligned with the priorities of the business (critical-path systems) and with the customer experience (key workflows that directly affect revenue).

For the technology angle, providing a single source of truth for Biz, Dev, and Ops teams should be viewed as a strategic investment and enabler. The solution needs to connect the customer experience to the backend resource utilization and performance bottlenecks for scenarios such as:

- Employees working from home.

- Business events like a marketing campaign.

- Increased reliance on third-party integrations for payment processing, supply chain, or media content.

The solution needs to support and adapt to complex, dynamic environments end to end, from mobile to cloud to mainframe. Lastly, the solution must auto-discover, scale, and automate the analysis of the large volumes of data involved.

Another key requirement is enabling development teams to self-serve, following standard operating procedures and staying within the guardrails of your governance model, by giving developers shorter feedback loops for performance testing and releases.

With “shift left” and enablement being a key to transformation, where are the key areas of focus to ensure success?

A proven process for transformation is a current-state assessment of people, process, and technology, followed by a future-state vision and roadmap. This starts with engaging all key stakeholders to understand their business needs and drivers and to identify gaps. Then, using a design thinking process, ideate on the future and establish the business vision. Building a prototype is a great way to quickly kick off an initiative and show proof of value for a new tool or process.

As you start automating some of your performance practices, such as load testing, environment provisioning, configuration comparisons, and monitoring, you’ll want to start with the “right early customers”: one or two product teams that are looking for fast, reliable feedback on API performance and that have a positive, collaborative attitude toward your assistance. As you walk the journey with them, you’ll learn lessons and tweak your approach, usually building out reusable pipelines and infrastructure logistics.

These early successes build better relationships between product teams and performance SMEs, and quality or practice managers should use them regularly to advocate for modernizing performance practices in other teams and to choose the next round of internal customers. In organizations with many products and projects, once people see the value of this iterative improvement, a Senior VP or CTO eventually asks, “Why isn’t this adopted across the whole organization?” By then, you will have gone through numerous iterations and improvement cycles, so your performance automation is scalable and produces highly valuable feedback for the product teams that adopt it.

In continuous delivery, software is broken into smaller components, and each build should be validated. Automated data collection and scoring of response times, resource consumption (such as HTTP or database calls), and resource utilization is achievable today with platforms such as Dynatrace for observability and NeoLoad for performance testing, which provide APIs and support use cases such as automated quality gates within the open source project Keptn and self-service performance testing.
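To make the idea of an automated quality gate more concrete, here is a minimal Python sketch of the scoring step, under stated assumptions: each measured value from a test run is compared against a threshold, the weights of the objectives that were met are summed into a percentage score, and that score maps to pass, warning, or fail. The metric names, thresholds, weights, and the 90%/75% cut-offs are all hypothetical, and this is not Keptn’s, Dynatrace’s, or NeoLoad’s actual implementation or API; in a real pipeline the measured values would be pulled from those tools rather than hard-coded.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Objective:
    """One hypothetical objective where lower measured values are better."""
    metric: str
    threshold: float  # the objective is met when the measured value <= threshold
    weight: int = 1   # relative importance in the total score


def evaluate(measured: Dict[str, float],
             objectives: List[Objective],
             pass_pct: float = 90.0,
             warn_pct: float = 75.0) -> Tuple[float, str]:
    """Score one test run against its objectives and return (score %, result)."""
    total_weight = sum(o.weight for o in objectives)
    # A metric missing from the run counts as a miss, so gaps in data collection
    # lower the score instead of silently passing the gate.
    met_weight = sum(
        o.weight
        for o in objectives
        if o.metric in measured and measured[o.metric] <= o.threshold
    )
    score = 100.0 * met_weight / total_weight if total_weight else 0.0
    if score >= pass_pct:
        return score, "pass"
    if score >= warn_pct:
        return score, "warning"
    return score, "fail"


# Hypothetical objectives for one build; names and limits are illustrative only.
OBJECTIVES = [
    Objective("response_time_p95_ms", 500.0, weight=2),
    Objective("error_rate_pct", 1.0),
    Objective("db_calls_per_request", 10.0),
    Objective("cpu_utilization_pct", 80.0),
]

# Hypothetical measurements from one load test run.
SAMPLE_RUN = {
    "response_time_p95_ms": 420.0,
    "error_rate_pct": 0.5,
    "db_calls_per_request": 12.0,  # exceeds its threshold of 10
    "cpu_utilization_pct": 60.0,
}

if __name__ == "__main__":
    score, result = evaluate(SAMPLE_RUN, OBJECTIVES)
    print(f"score={score:.1f}% result={result}")  # prints "score=80.0% result=warning"
```

In this sample run, every objective except the database-call count is met, so 4 of 5 weight points are earned and the build lands in the warning band. One reasonable design choice is to let a warning proceed with a human review while a fail stops promotion automatically, which keeps the feedback loop short without blocking on every minor regression.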

So, what was our favorite beer and cheese pairing?

I was a huge fan of the City Brew Tours IPA, and as a New Englander, I thought the Vermont Governors cheddar was excellent. Syed’s favorite beer and cheese combo was the Yacht Rocker Hazy IPA and Smoked Windsordale. And if you want to buy Paul a round, get him the Kölsch and the Sriracha Windsordale!